
Inseop Chung

I'm a Staff Researcher at the AI Center of Samsung Electronics, where I work on various topics in computer vision and machine learning. I received my Ph.D. from the Department of Intelligence and Information, Graduate School of Convergence Science and Technology (GSCST), Seoul National University (SNU), under the supervision of Professor Nojun Kwak, and I was a member of his Machine Intelligence and Pattern Analysis Lab (MIPAL). My research focuses on domain adaptation, domain generalization, test-time adaptation, object detection, semantic segmentation, knowledge distillation, and vision-language-action models.

Email / CV / Google Scholar / LinkedIn / GitHub

Research Interests

I'm broadly interested in computer vision, deep learning, and machine learning, with a primary focus on Vision-Language-Action (VLA) models for embodied AI and robotics. My goal is to build AI systems that can perceive, reason, and act robustly in dynamic, real-world environments.

My recent research centers on making AI models more adaptive and generalizable to unseen scenarios through techniques such as domain adaptation, domain generalization, and continual test-time adaptation. I also have experience in knowledge distillation, object detection, and semantic segmentation.

Currently, I'm particularly passionate about advancing VLA models that integrate vision and language with action understanding for real-world robotic control. I actively work with open-source VLA frameworks such as OpenVLA, Physical Intelligence (PI0), and NVIDIA Isaac GR00T N1.5, and I have hands-on experience adapting and extending these platforms for specific robotic manipulation tasks.

My long-term interest lies in developing scalable and generalizable embodied intelligence: agents that can learn continuously from diverse environments, understand natural language instructions, and perform complex physical tasks with minimal supervision.

Publications

Mitigating the Bias in the Model for Continual Test-Time Adaptation

Inseop Chung, Kyomin Hwang, Jayeon Yoo, Nojun Kwak

IEEE Access, 2025.Aug / arXiv

This work improves Continual Test-Time Adaptation by introducing class-wise feature clustering and source-aligned prototype anchoring. It dynamically reduces prediction bias and enhances model robustness under distribution shifts.

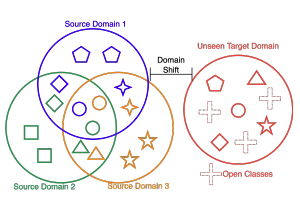

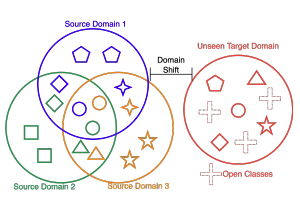

Open Domain Generalization with a Single Network by Regularization Exploiting Pretrained Features

Inseop Chung, Kiyoon Yoo, Nojun Kwak

ICLR 2024 Workshop, 2024.May / arXiv

We propose a single-network method for Open Domain Generalization that improves unseen domain generalization and unknown class detection. It leverages a linear-probed head and two regularization terms to refine the classifier while preserving pre-trained features, enabling efficient and robust generalization.

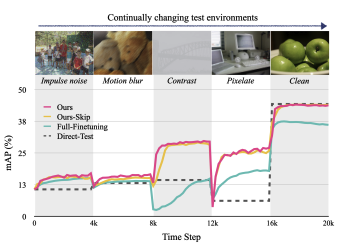

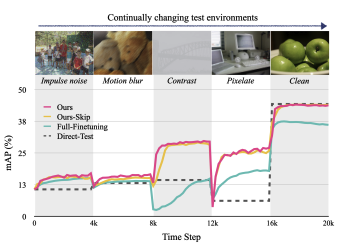

What, How, and When Should Object Detectors Update in Continually Changing Test Domains?

Jayeon Yoo, Dongkwan Lee, Inseop Chung, Donghyun Kim, Nojun Kwak

CVPR, 2024.Jun / arXiv

We propose a lightweight, architecture-agnostic test-time adaptation method for object detection under continual domain shifts. By updating only small adaptor modules and introducing a class-wise feature alignment strategy, our approach achieves efficient, robust adaptation without modifying the backbone, improving mAP on standard benchmarks while preserving real-time performance.

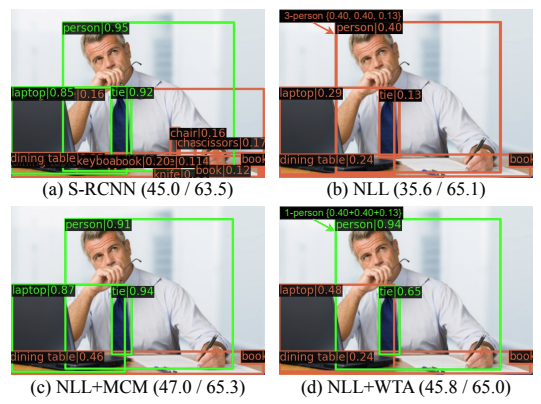

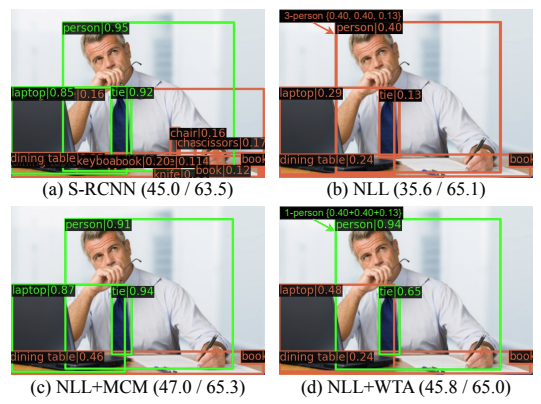

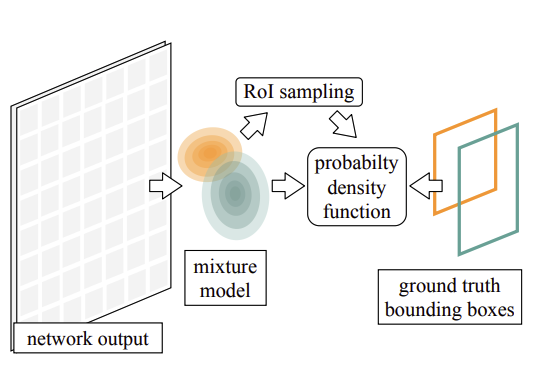

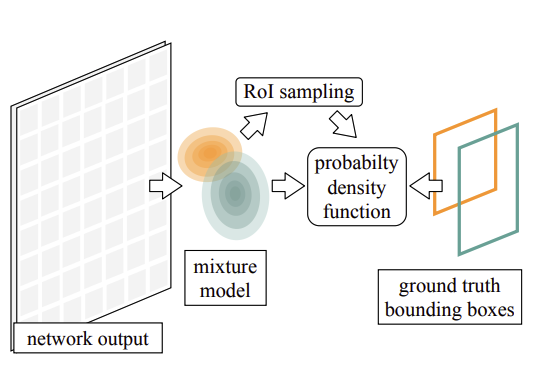

End-to-End Multi-Object Detection with a Regularized Mixture Model

Jaeyoung Yoo*, Hojun Lee*, Seunghyeon Seo, Inseop Chung, Nojun Kwak

ICML, 2023.Jul / arXiv

We introduce D-RMM, an end-to-end multi-object detector trained with negative log-likelihood and a novel regularization loss. By modeling detection as density estimation, our method removes training heuristics, improves confidence reliability, and outperforms prior end-to-end detectors on MS COCO.

Exploiting Inter-pixel Correlations in Unsupervised Domain Adaptation for Semantic Segmentation

Inseop Chung, Jayeon Yoo, Nojun Kwak

WACV 2023 Workshop (Best Paper Award), 2023.Jan / arXiv

We enhance UDA for semantic segmentation by transferring inter-pixel correlations from the source to the target domain using a self-attention module. Trained only on the source, this module guides the segmentation network on the target domain beyond noisy pseudo labels, improving performance on standard benchmarks.

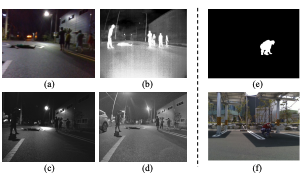

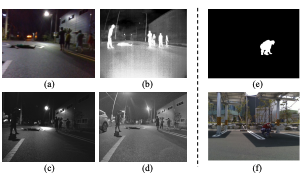

X-MAS: Extremely Large-Scale Multi-Modal Sensor Dataset for Outdoor Surveillance in Real Environments

DongKi Noh, Chang Ki Sung, Taeyoung Uhm, Wooju Lee, Hyungtae Lim, Jaeseok Choi, Kyuewang Lee, Dasol Hong, Daeho Um, Inseop Chung, Hochul Shin, Min-Jung Kim, Hyoung-Rock Kim, Seung-Min Baek, Hyun Myung

IEEE Robotics and Automation Letters (RA-L), 2023.Jan

We introduce X-MAS, a large-scale multi-modal dataset for outdoor surveillance with 500K+ annotated image pairs across five sensor types.

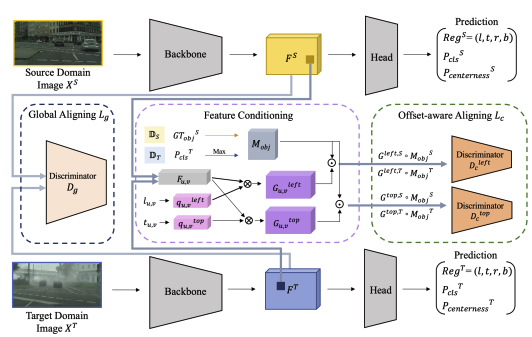

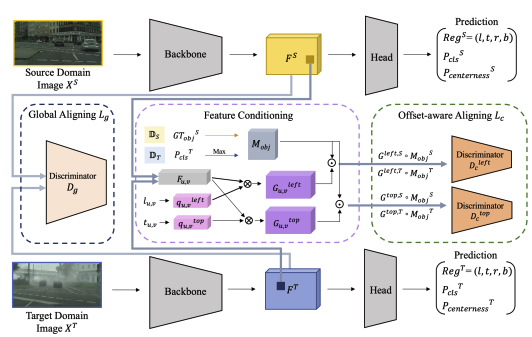

Unsupervised Domain Adaptation for One-Stage Object Detector Using Offsets to the Bounding Box

Jayeon Yoo, Inseop Chung, Nojun Kwak

ECCV, 2022.Oct / arXiv

We propose OADA, an offset-aware domain adaptive object detector that conditions feature alignment on bounding box offsets to reduce negative transfer. This simple yet effective method improves both discriminability and transferability, achieving state-of-the-art results in domain adaptive detection.

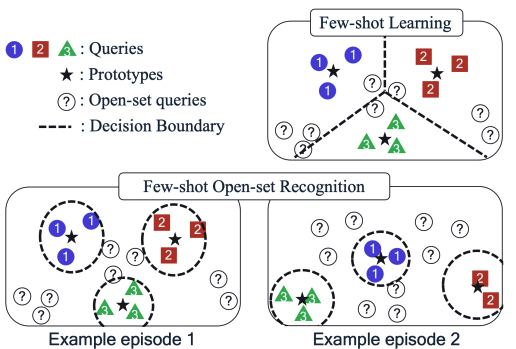

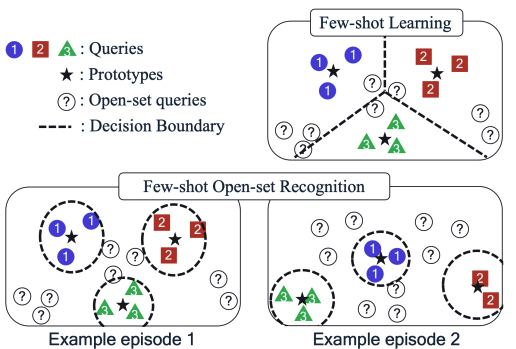

Dummy Prototypical Networks for Few-Shot Open-Set Keyword Spotting

Byeonggeun Kim, Seunghan Yang, Inseop Chung, Simyung Chang

INTERSPEECH, 2022.Sep / arXiv

We propose D-ProtoNets, a metric learning-based method for few-shot open-set keyword spotting. Using dummy prototypes, our approach effectively rejects unknown classes and outperforms recent FSOSR methods on both splitGSC and miniImageNet benchmarks.

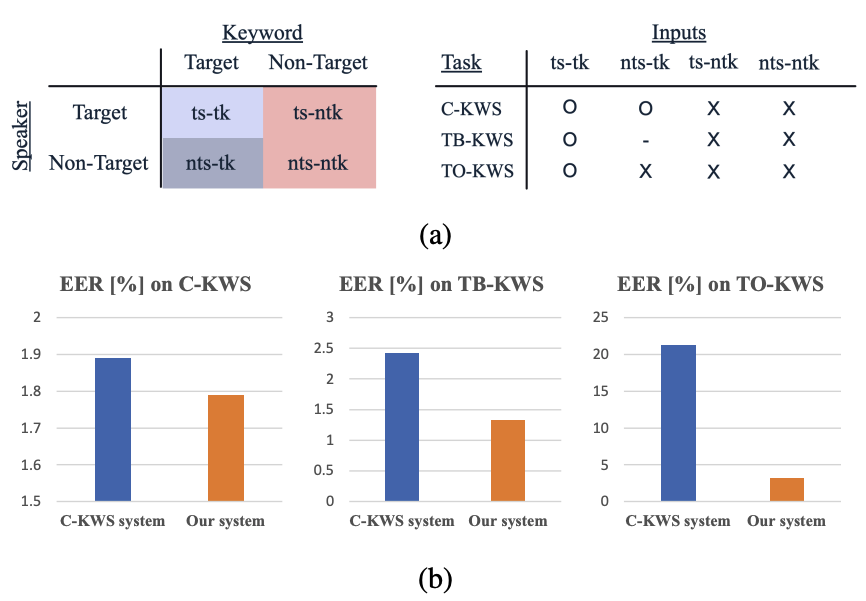

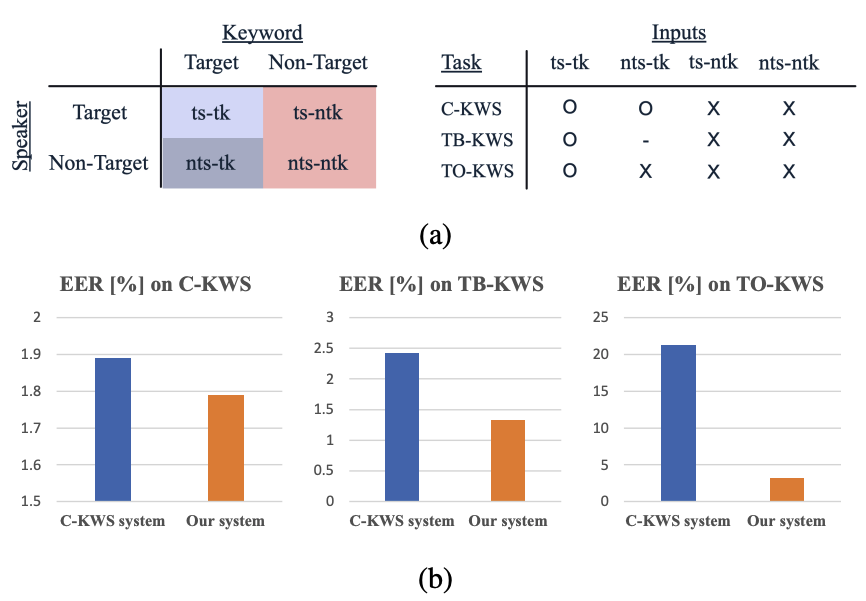

Personalized Keyword Spotting through Multi-task Learning

Seunghan Yang, Byeonggeun Kim, Inseop Chung, Simyung Chang

INTERSPEECH, 2022.Sep / arXiv

We propose PK-MTL, a multi-task learning framework for personalized keyword spotting that jointly learns keyword spotting and speaker verification.

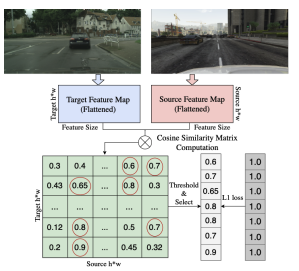

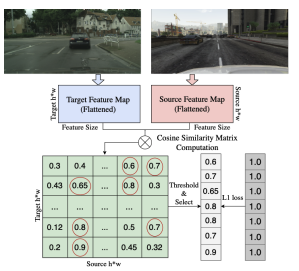

Maximizing Cosine Similarity Between Spatial Features for Unsupervised Domain Adaptation in Semantic Segmentation

Inseop Chung, Daesik Kim, Nojun Kwak

WACV, 2022.Jan / arXiv

We present a cosine similarity-based method for unsupervised domain adaptation in semantic segmentation. By aligning source and target features via a class-wise feature dictionary and similarity maximization, our approach reduces domain gaps and improves performance on standard UDA benchmarks.

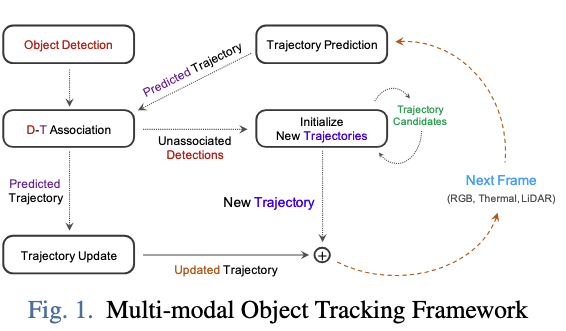

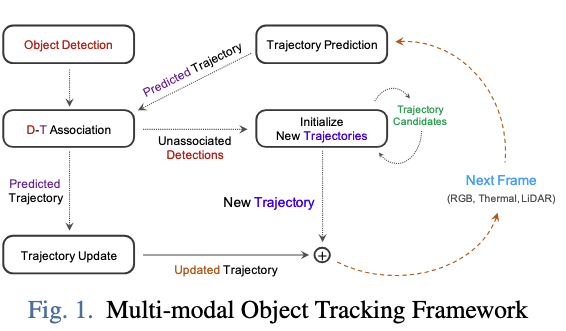

Multi-modal Object Detection, Tracking, and Action Classification for Unmanned Outdoor Surveillance Robots

Kyuewang Lee*, Inseop Chung*, Daeho Um, Jaeseok Choi, Yeji Song, Seunghyeon Seo, Nojun Kwak, Jin Young Choi

ICCAS, 2021.Oct

We present a real-time multi-modal framework for outdoor robots that detects, tracks, and classifies human actions using RGB, thermal, and LiDAR data.

Training Multi-Object Detector by Estimating Bounding Box Distribution for Input Image

Jaeyoung Yoo, Hojun Lee*, Inseop Chung*, Geonseok Seo, Nojun Kwak

ICCV, 2021.Oct / arXiv

We propose MDOD, a novel object detector that formulates multi-object detection as bounding box density estimation using a mixture model, simplifying training and improving performance.

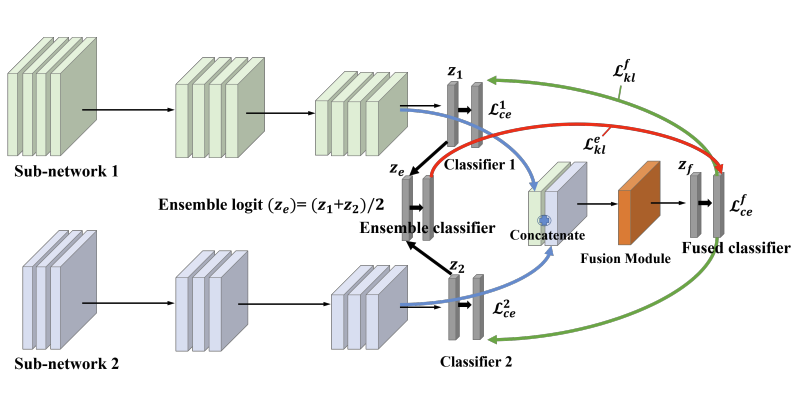

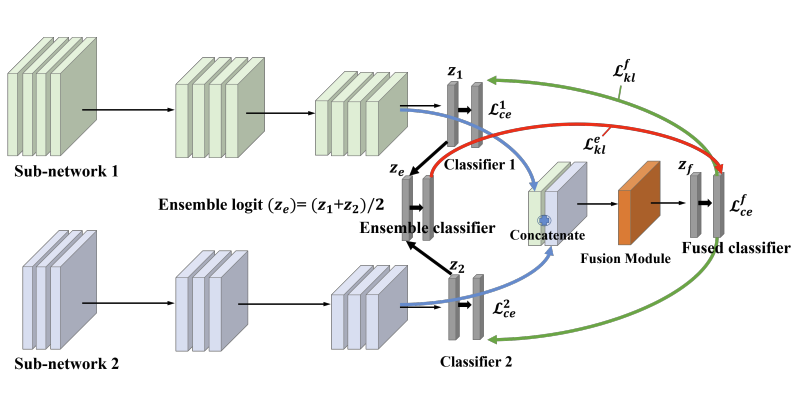

Feature Fusion for Online Mutual Knowledge Distillation

Jangho Kim, Minsung Hyun, Inseop Chung, Nojun Kwak

ICPR, 2021.Jan / arXiv

We propose Feature Fusion Learning (FFL), a mutual knowledge distillation framework that combines features from diverse sub-networks to train a stronger fused classifier, improving both overall and individual network performance.

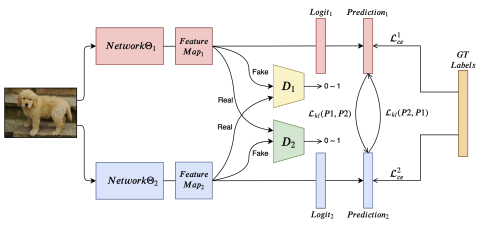

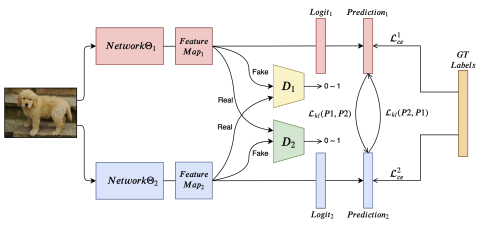

Feature-map-level Online Adversarial Knowledge Distillation

Inseop Chung, SeongUk Park, Jangho Kim, Nojun Kwak

ICML, 2020.Jul / Proceedings

We propose an online knowledge distillation method that aligns feature maps via adversarial training, enabling networks to learn from each other's internal representations and improving performance, especially in mixed-size network setups.

Education

Ph.D. in Engineering, Seoul National University, 2019–2024

B.S. in Creative Technology Management & Computer Science, Yonsei University, 2012–2019

Work Experience

Staff Researcher, Samsung Electronics, 2024.Mar–Present

Research Intern, Qualcomm AI Research Korea, 2022.Jan–2022.Jul

Research Intern, Naver Webtoons Corp., 2020.Jun–2020.Dec

Patents

Domestic Patents

International Patents

Funding

Honour & Awards